pyproximal.optimization.primaldual.AdaptivePrimalDual

- pyproximal.optimization.primaldual.AdaptivePrimalDual(proxf, proxg, A, x0, tau, mu, alpha=0.5, eta=0.95, s=1.0, delta=1.5, z=None, niter=10, tol=1e-10, callback=None, show=False)

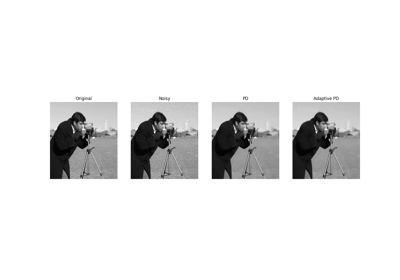

Adaptive Primal-dual algorithm

Solves the minimization problem in pyproximal.optimization.primaldual.PrimalDual using an adaptive version of the first-order primal-dual algorithm of [1]. The main advantage of this method is that the step sizes \(\tau\) and \(\mu\) change through the iterations, which can improve the overall speed of convergence of the algorithm.

- Parameters

- proxf

pyproximal.ProxOperator Proximal operator of the \(f\) function

- proxg

pyproximal.ProxOperator Proximal operator of the \(g\) function

- A

pylops.LinearOperator Linear operator of g

- x0

numpy.ndarray Initial vector

- tau

float Stepsize of subgradient of \(f\)

- mu

float Stepsize of subgradient of \(g^*\)

- alpha

float, optional Initial adaptivity level (must be between 0 and 1)

- eta

float, optional Scaling of the adaptivity level: factor by which the current alpha is multiplied every time the norms of the two residuals start to diverge (must be between 0 and 1)

- s

float, optional Scaling of residual balancing principle

- delta

float, optional Balancing factor. Step sizes are updated only when their ratio exceeds this value.

- z

numpy.ndarray, optional Additional vector

- niter

int, optional Number of iterations of iterative scheme

- tol

float, optional Tolerance on residual norms

- callback

callable, optional Function with signature (callback(x)) to call after each iteration, where x is the current model vector

- show

bool, optional Display iterations log


- Returns

- x

numpy.ndarray Inverted model

- steps

tuple Evolution of tau, mu and alpha through the iterations

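For reference, the residual-balancing rule of [1] that alpha, eta, s and delta control can be written as follows for the saddle-point form \(\min_x \max_y f(x) + y^T A x - g^*(y)\), ignoring the additional vector z. This is a summary of [1] under that assumption, not a transcription of pyproximal's code, whose residual definitions may differ in detail; \(\Delta\) denotes delta.

```latex
\begin{aligned}
p_{k+1} &= \frac{x_k - x_{k+1}}{\tau_k} - A^T (y_k - y_{k+1}), \\
d_{k+1} &= \frac{y_k - y_{k+1}}{\mu_k} - A (x_k - x_{k+1}), \\
\|p_{k+1}\| > s \Delta \|d_{k+1}\| &\;\Rightarrow\; \tau_{k+1} = \frac{\tau_k}{1-\alpha_k},\; \mu_{k+1} = \mu_k (1-\alpha_k),\; \alpha_{k+1} = \eta \alpha_k, \\
\|p_{k+1}\| < \frac{s}{\Delta} \|d_{k+1}\| &\;\Rightarrow\; \tau_{k+1} = \tau_k (1-\alpha_k),\; \mu_{k+1} = \frac{\mu_k}{1-\alpha_k},\; \alpha_{k+1} = \eta \alpha_k,
\end{aligned}
```

and the step sizes are left unchanged otherwise. Since \(\tau_{k+1}\mu_{k+1} = \tau_k\mu_k\), every update preserves the product of the step sizes, and hence any condition of the form \(\tau\mu\|A\|^2 < 1\) satisfied by the initial values.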

Notes

The Adaptive Primal-dual algorithm shares the same iterations as the original pyproximal.optimization.primaldual.PrimalDual solver. The main difference is that the step sizes tau and mu are adaptively changed at each iteration, which can lead to faster convergence. Changes are applied by tracking the norms of the primal and dual residuals: when their mutual ratio grows beyond a certain threshold delta, the step lengths are updated to balance the minimization and maximization parts of the overall optimization process.

- [1]

T. Goldstein, M. Li, X. Yuan, E. Esser, R. Baraniuk, “Adaptive Primal-Dual Hybrid Gradient Methods for Saddle-Point Problems”, ArXiv, 2013.
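The residual-balancing loop described in the Notes can be sketched in plain NumPy as follows. This is a minimal sketch under stated assumptions, not pyproximal's implementation: the function adaptive_pdhg, its prox_f(v, tau) and prox_gdual(v, mu) callables, and the toy problem are hypothetical, and the residuals follow the definitions of [1]. The toy problem \(\min_x \tfrac{1}{2}\|x-b\|^2 + \tfrac{1}{2}\|Ax-d\|^2\) is chosen because both proximal operators have closed forms and the result can be checked against a direct linear solve.

```python
import numpy as np


def adaptive_pdhg(prox_f, prox_gdual, A, x0, tau, mu, alpha=0.5, eta=0.95,
                  s=1.0, delta=1.5, niter=1000, tol=1e-10):
    """Hypothetical adaptive primal-dual sketch (residual balancing as in [1]).

    prox_f(v, tau) must return prox_{tau f}(v) and prox_gdual(v, mu) must
    return prox_{mu g*}(v). Returns x and the (tau, mu, alpha) histories.
    """
    x = x0.copy()
    y = np.zeros(A.shape[0])
    taus, mus, alphas = [tau], [mu], [alpha]
    for _ in range(niter):
        xold, yold = x, y
        # primal proximal step, then extrapolation of the primal variable
        x = prox_f(xold - tau * (A.T @ yold), tau)
        xhat = 2 * x - xold
        # dual proximal step evaluated at the extrapolated point
        y = prox_gdual(yold + mu * (A @ xhat), mu)
        # primal (rp) and dual (rd) residuals
        rp = (xold - x) / tau - A.T @ (yold - y)
        rd = (yold - y) / mu - A @ (xold - x)
        pnorm, dnorm = np.linalg.norm(rp), np.linalg.norm(rd)
        if pnorm < tol and dnorm < tol:
            break
        # residual balancing: enlarge the step of the side lagging behind;
        # tau * mu is left unchanged and alpha shrinks after every update
        if pnorm > s * delta * dnorm:
            tau, mu, alpha = tau / (1 - alpha), mu * (1 - alpha), alpha * eta
        elif pnorm < s * dnorm / delta:
            tau, mu, alpha = tau * (1 - alpha), mu / (1 - alpha), alpha * eta
        taus.append(tau)
        mus.append(mu)
        alphas.append(alpha)
    return x, (np.array(taus), np.array(mus), np.array(alphas))


# Toy problem (assumption): f(x) = 0.5||x - b||^2, g(v) = 0.5||v - d||^2
rng = np.random.default_rng(0)
A = rng.standard_normal((20, 10))
A /= np.linalg.norm(A, 2)  # scale to unit spectral norm
b = rng.standard_normal(10)
d = rng.standard_normal(20)

x, (taus, mus, alphas) = adaptive_pdhg(
    lambda v, t: (v + t * b) / (1 + t),  # prox of tau * f
    lambda v, m: (v - m * d) / (1 + m),  # prox of mu * g*, g*(y) = 0.5||y||^2 + y^T d
    A, np.zeros(10), tau=0.9, mu=0.9, niter=5000)

# Direct solution of the normal equations (I + A^T A) x = b + A^T d
x_ref = np.linalg.solve(np.eye(10) + A.T @ A, b + A.T @ d)
print(np.max(np.abs(x - x_ref)))
```

Because each update multiplies one step size by \((1-\alpha)\) and divides the other by the same factor, the product tau * mu stays at its initial value of 0.81 (below \(1/\|A\|^2 = 1\) here), while the shrinking alpha makes the step sizes settle as the iterations proceed.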